Reimagining Search with Generative AI:

How We Built a Real-Time, Intent-Aware News Answering Engine (#AskDH)

The Starting Point: A Vision for Smarter Search

At our core, we’re a technology-driven Enterprise SaaS company, powering some of the most dynamic digital platforms. Our mission has always been simple: make information more accessible, contextual, and actionable for everyone.

As part of our innovation roadmap, Generative AI emerged not just as a strategic lever but as the future foundation of how we deliver value. We envisioned embedding intelligence into every user interaction - removing friction, enabling personalization, and driving trust at scale.

This vision gave birth to #AskDH, our real-time, intent-aware news answering engine, designed to go beyond search to understand, contextualize, and converse.

The Problem: Traditional Search Wasn’t Enough

Search is at the heart of user engagement. Yet our existing search infrastructure, rooted in lexical search techniques, was showing its cracks:

The result? Laggy, inaccurate, and frustrating experiences that eroded trust. For a platform where speed and precision are everything, this was a P0 engineering priority.

Why It Mattered

Search is the foundation layer on which all downstream generative experiences (summarization, synthesis, recommendations) are built. If retrieval is noisy or slow, users disengage.

Even small inefficiencies in query understanding, retrieval, or reranking cascade into:

This wasn’t just a technical issue. It was a business-critical challenge, impacting user satisfaction, adoption rates, and ultimately, our ability to scale.

The Turning Point: Generative AI

We saw an opportunity to reinvent search itself.

Instead of treating queries as strings of text, what if we treated them as expressions of intent: contextual, multilingual, time-sensitive, and dynamic?

That question became the blueprint for our Generative AI-powered Real-Time News Answering Engine (#AskDH).
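To make the idea of a query as an expression of intent concrete, here is a minimal sketch in Python. It is illustrative only and is not the #AskDH implementation: the `QueryIntent` fields, the `RECENCY_CUES` table, and the rule-based `parse_intent` function are all assumptions introduced for this example. A production system would more plausibly use a trained model for this step.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class QueryIntent:
    """Hypothetical structured view of a user query."""
    raw_query: str
    language: str                           # language code supplied by the caller
    topic: str                              # what the user is asking about
    freshness_window: Optional[timedelta]   # None means no recency constraint
    is_follow_up: bool                      # True if the topic came from context


# Assumed recency phrases; a real system would need a far richer set.
RECENCY_CUES = {
    "today": timedelta(days=1),
    "latest": timedelta(days=1),
    "this week": timedelta(days=7),
}


def parse_intent(raw_query: str, language: str = "en",
                 previous_topic: Optional[str] = None) -> QueryIntent:
    """Toy rule-based parser: extracts a recency window and a topic."""
    text = raw_query.lower().strip(" ?!.")
    window = None
    for cue, span in RECENCY_CUES.items():
        if cue in text:
            window = span
            text = text.replace(cue, " ")
            break
    topic = " ".join(text.split())
    # If nothing but a recency cue remains, reuse the conversation's topic.
    is_follow_up = topic == "" and previous_topic is not None
    if is_follow_up:
        topic = previous_topic
    return QueryIntent(raw_query, language, topic, window, is_follow_up)


first = parse_intent("Latest election results")
second = parse_intent("This week?", previous_topic=first.topic)
print(first.topic, first.freshness_window)
print(second.topic, second.freshness_window, second.is_follow_up)
```

The point of the structure is that retrieval can filter on `freshness_window` and `language` instead of matching the literal words "latest" or "this week" against article text.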

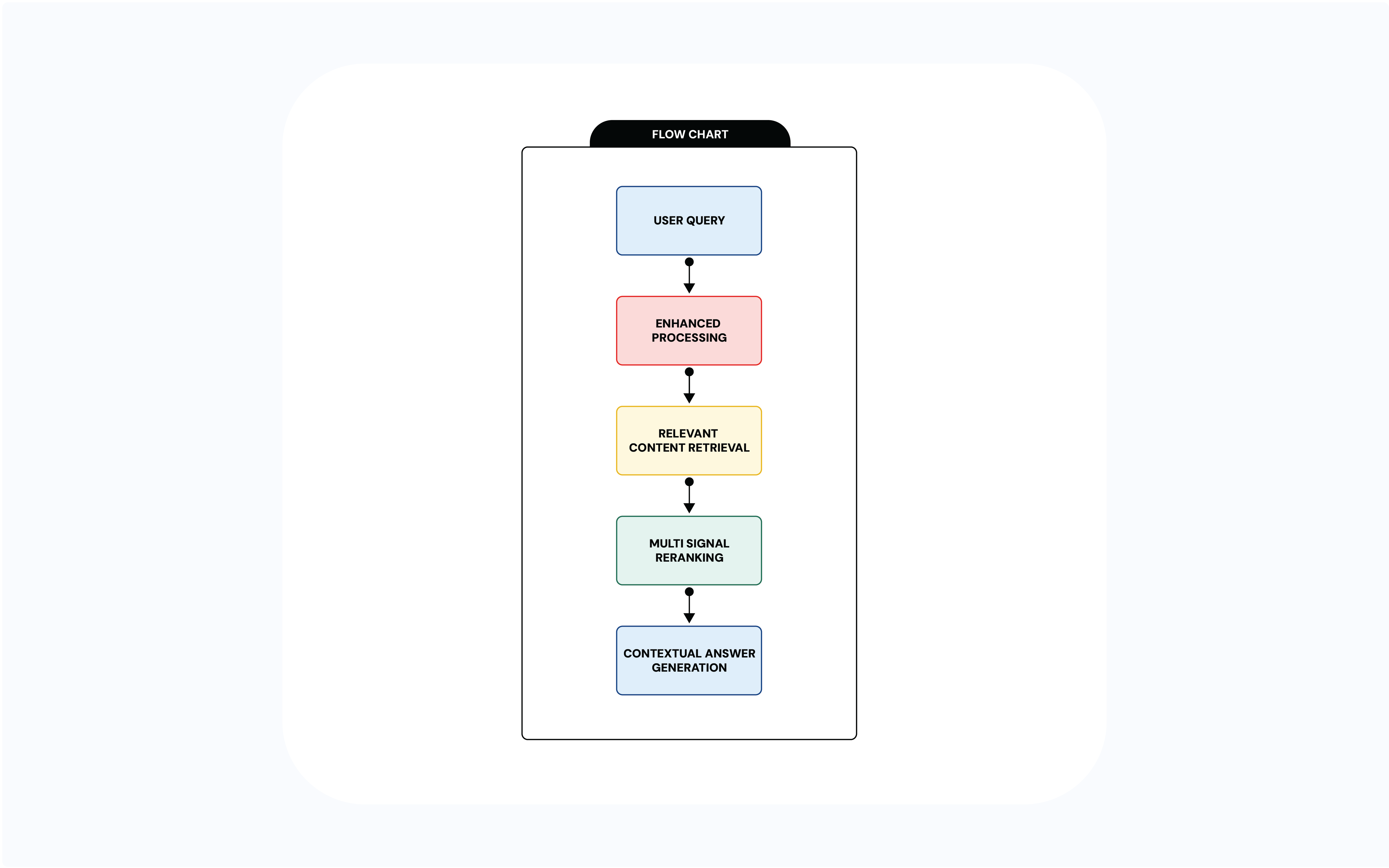

The Architecture: Real-Time, Intent-Aware, and Multilingual

The solution was designed as a hybrid Retrieval-Augmented Generation (RAG) pipeline, optimized for speed, trust, and explainability.
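As a rough illustration of what a hybrid RAG pipeline can look like, the sketch below fuses a lexical ranking with a similarity-based ranking and then builds a grounded prompt. Several things here are assumptions rather than details from #AskDH: "hybrid" is read as lexical plus semantic retrieval, term overlap stands in for a lexical scorer such as BM25, character-trigram cosine similarity stands in for learned embeddings, reciprocal rank fusion is one common way to merge rankings, and the `DOCS` corpus and all function names are hypothetical. The generation step is left out; only the prompt that would be sent to a model is built.

```python
import math
from collections import Counter

# Hypothetical three-article corpus, keyed by article id.
DOCS = {
    "a1": "Central bank raises interest rates to curb inflation",
    "a2": "Local team wins the cricket championship final",
    "a3": "Inflation eases as fuel prices fall across the country",
}


def lexical_score(query: str, doc: str) -> int:
    """Number of query terms present in the document (crude BM25 stand-in)."""
    doc_terms = set(doc.lower().split())
    return sum(1 for term in query.lower().split() if term in doc_terms)


def trigram_vector(text: str) -> Counter:
    """Character-trigram counts, used here in place of a real embedding."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def rank(scores: dict) -> list:
    """Doc ids by descending score; ties broken by id for determinism."""
    return sorted(scores, key=lambda d: (-scores[d], d))


def reciprocal_rank_fusion(rankings: list, k: int = 60) -> list:
    """Merge rankings: each doc earns 1 / (k + position) per ranking."""
    fused = Counter()
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + position)
    return sorted(fused, key=lambda d: (-fused[d], d))


def retrieve(query: str, top_n: int = 2) -> list:
    query_vec = trigram_vector(query)
    lexical = rank({d: lexical_score(query, t) for d, t in DOCS.items()})
    semantic = rank({d: cosine(query_vec, trigram_vector(t))
                     for d, t in DOCS.items()})
    return reciprocal_rank_fusion([lexical, semantic])[:top_n]


def build_prompt(query: str, doc_ids: list) -> str:
    """Ground the model in retrieved sources and ask for citations."""
    context = "\n".join(f"[{d}] {DOCS[d]}" for d in doc_ids)
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\n\nQuestion: {query}")


hits = retrieve("inflation interest rates")
print(build_prompt("inflation interest rates", hits))
```

Carrying article ids through to the prompt is one simple way to support the explainability goal: the generated answer can cite the sources it was given, and those citations can be checked against what retrieval actually returned.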

The Tech Stack Behind the Magic

This combination allowed us to deliver fast, scalable, and explainable AI-powered search without compromising trust or latency.

Overcoming Security & Governance Hurdles

Given the sensitivity of user queries and news content, security and compliance were embedded from day one:

We balanced innovation with responsibility, ensuring the system is trustworthy, transparent, and compliant.

The Impact: From Laggy Search to Instant Answers

Since deploying #AskDH, the transformation has been remarkable:

Both internal teams and end-users report a step-change in experience:

Looking Ahead

#AskDH isn’t just a feature; it’s a platform capability. By solving the hardest challenges in real-time, multilingual, intent-aware search, we’ve laid the groundwork for:

Generative AI didn’t just fix search. It redefined how our users connect with news: faster, smarter, and more human.